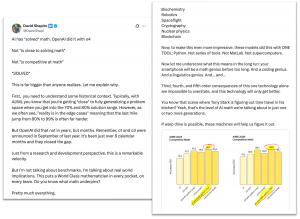

A post on X with nearly 350,000 views has gone viral for its startling claim that OpenAI has “solved” math. The post further claims that this will bring massive changes to math-based fields, from biochemistry to nuclear physics, and could even open the floodgates to time travel and “warp drive” in “one or two more generations.”

Is there any truth to this claim? The viral post comes in the wake of OpenAI announcing its two latest models, OpenAI o3 and o4-mini, which it calls its “smartest models to date” and says are trained to think for longer before responding. In particular, o4-mini is optimised for “math, coding and visual tasks,” and has performed better than any other AI model on the American Invitational Mathematics Examination (AIME).

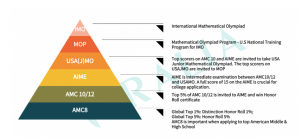

The AIME is a 3-hour, 15-question test taken without calculators or any tools other than pencils, erasers, rulers, and compasses. According to OpenAI, o4-mini achieved 92.7% accuracy without tools and 99.5% when allowed to use Python (a programming language that can perform calculations and run complex mathematical functions) as a tool. The author of the post appears to base his claims on this 99.5% figure, stating outright that it is an indicator of o4-mini “solving math.”

However, our research indicates that the notion of math being solvable is far more complex than the post suggests. For one, the AIME is only one of many possible benchmarks of mathematical ability, and it is considered less challenging than both national mathematical olympiads and the International Mathematical Olympiad.

Mathematics as a discipline studies a broad range of methods, theories and theorems, which must be “proven” through deductive reasoning in the form of mathematical proofs. The idea that math can be “solved” (or even that every mathematical statement can be proven) is widely regarded as impossible: Gödel’s incompleteness theorems show that any consistent, mechanically checkable set of axioms strong enough to express basic arithmetic leaves some statements that can be neither proven nor disproven from those axioms.

An AI Reasoning Research Lead at OpenAI has also responded to the post, stressing that they “did not solve math” and pointing out that both o3 and o4-mini are “still not great at writing proofs” and are “nowhere close” to winning gold at the International Mathematical Olympiad.

The claim’s hyperbolic and inaccurate framing may stem from excitement over the progress demonstrated by OpenAI’s new models, whose advancements signal new possibilities for AI more generally. However, the post’s insistence that AI has “solved math” (to the point of dismissing more measured statements within the post itself) is inaccurate and misleading. We therefore give this claim a rating of false.

This claim is an example of how hyperbolic language and exaggeration, used to grab attention or add emphasis, can spread misinformation. The problem is even more concerning when the subject is as unwieldy (and often intimidating) as mathematics.

Questioning why certain definitive or embellished words are used, and taking the time to research further when a statement seems exaggerated, is an extremely important way of filtering out misinformation online.