This video of a kangaroo at an airport has been going viral on various social media platforms over the past week. In the video, a kangaroo holding a boarding pass stands beside a woman who appears to be arguing with airport staff. Versions of the video have gone extremely viral, with millions of impressions on X, Instagram, and Facebook. Many of the comments and re-shares appear to take the video at face value. We took a closer look to find out where exactly the video comes from.

While the video shows no overt visual signs of digital manipulation (and many circulating versions have no audio at all), we found early versions of the video in which the women can be heard arguing in muffled, distorted voices. Although AI technology has become extremely sophisticated, its audio capabilities are still far from perfect. AI-generated audio, especially speech, tends to lack authentic emotion: tone and pacing are often incoherent, and emphasis falls in the wrong places. Many commercially available AI video tools also tend to produce distorted and glitchy audio.

The audio therefore raised alarm bells for us, and our suspicions grew when we found that the earliest iteration of the video had been posted on an Instagram page called “Infinite Unreality”.

The Instagram account features many short videos of animals (from capybaras to elephants), some of which are overtly unrealistic (for instance, a clip of a human-sized praying mantis). The Infinite Unreality website also explicitly states that they produce “unique” AI videos.

Therefore, it appears that the kangaroo video, which has been shared as a real occurrence, is actually AI-generated. No such incident took place; multiple social media accounts have been re-sharing the original video (which made no claims of authenticity) with misleading captions. We rate this claim as false.

While perhaps not directly malicious or harmful, this claim is emblematic of a wider wave of highly realistic AI-generated videos sweeping through the online space. The fact that this video was widely shared and accepted as real by millions of users shows how susceptible users can be to AI videos. If not addressed and mitigated, this susceptibility could be exploited in far more serious and damaging ways, for instance to spread targeted disinformation about health, wars, or natural disasters.

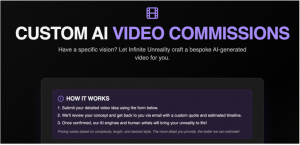

The growing difficulty users have in detecting AI-generated media has been compounded by the rapid advancement and increasing accessibility of AI technology. The creators of the kangaroo video, for instance, offer AI video services: for as little as $100, anyone can commission them to produce a tailor-made AI video.

Recently released tools such as Google’s Veo 3 have been lauded as the best yet, producing “shockingly realistic” videos. While many of these tools still cost money to use, and their output can often be identified as AI under closer scrutiny, their arrival on the market has brought an influx of AI-generated videos to social media. Many of these videos are not explicitly labelled as AI, adding to the confusion.

What is the cost of users being unable to easily identify an AI-generated video?

While some might not see an issue with cute or funny videos like this kangaroo clip, the lack of proper labelling and clarification erodes AI literacy, and unlabelled AI videos can become a powerful tool for bad actors seeking to disrupt public discourse around important issues or events such as elections.

As internet users in this environment, we need to be sceptical and willing to cross-check videos and their provenance in order to stay alert to AI-generated media and protect ourselves against mis- and disinformation. While no perfect AI-detection tool exists, knowing which resources are available is one way to build defences against disinformation. These include lists of characteristics that AI-generated videos tend to have (such as glitchy audio, misshapen hands, uncanny actions, or morphing) and deepfake-scanning software.